Technology and Society

Post-Post Truth

In the wake of the 2016 election, the social media platforms that play such an influential role in our news consumption have admitted they bear some responsibility for propagating falsehoods and are examining ways to provide greater accountability.

Mark Zuckerberg of Facebook initially denied any responsibility for the accelerated sharing of nonsense and propaganda among like-minded groups, but he then reversed course and eventually published what almost reads like a campaign document: a commitment to building infrastructure for groups that are supportive, safe, informed, civically engaged, and inclusive. Not only should Facebook connect people, it should encourage certain kinds of social behavior. The platform has a platform, after all.

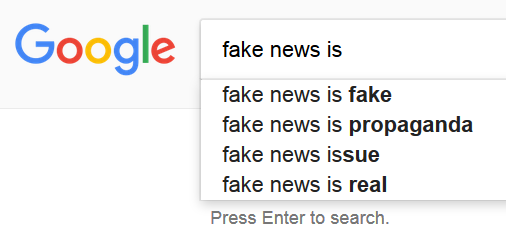

Google has run into trouble on two fronts: its “featured snippets” have supplied answers, delivered through its Google Home gadget, that are complete fabrications, and it has angered advertisers by placing their ads in the company of offensive videos. In response, it has rolled out a feature that tags dubious stories with reports from third-party fact-checking organizations, and it is fiddling with the way people who upload videos to YouTube are rewarded with ad dollars in order to placate advertisers.

But while the technologies that were designed to capture attention and engage users for the sake of advertising have made it easier for fake news (however that’s defined) to spread, with their architectures encouraging the rise of polarized news environments, technical fixes won’t solve the problem of polarization and distrust. As danah boyd puts it,

“Too many people seem to think that you can build a robust program to cleanly define who and what is problematic, implement it, and then presto—problem solved. Yet anyone who has combatted hate and intolerance knows that Band-Aid solutions don’t work. They may make things invisible for a while, but hate will continue to breed unless you address the issues at the source. We need everyone — including companies — to be focused on grappling with the underlying dynamics that are mirrored and magnified by technology.”

Nor can governments pass laws that will fix the problem (though some are trying). And teaching students the art of fact-checking won’t solve the problem, either. It goes deeper than that.

Christopher Douglas has explored the ways far-right Christian organizations that seized a place in politics paved the way for “alternative facts,” not just by rejecting “theologically disturbing bodies of knowledge” such as the theory of evolution and historical-critical biblical scholarship, but by creating alternative authorities: “they didn’t just question the ideas and conclusions of the secular world and its institutions of knowledge. In a form of resistance, they adapted modern institutions and technologies to create bodies of counter-expertise.” It’s not just a retreat from commonly held beliefs; it’s the creation of a separate reality that has the power to question the fundamental legitimacy of science and the academy.

Yochai Benkler, Robert Faris, Hal Roberts, and Ethan Zuckerman showed, in a study of which sites people shared on social media, that political polarization was a pre-existing condition, not a problem caused by the architectures of social media. Those who distrusted traditional media and gravitated to new media sites anchored by Breitbart shared links from that world, whereas Clinton supporters were much more likely to share news from traditional sources. The authors write:

“. . . the fact that these asymmetric patterns of attention were similar on both Twitter and Facebook suggests that human choices and political campaigning, not one company’s algorithm, were responsible for the patterns we observe. These patterns might be the result of a coordinated campaign, but they could also be an emergent property of decentralized behavior, or some combination of both. Our data to this point cannot distinguish between these alternatives.”

Tagging a story on Facebook as dubious on the word of a third-party fact-checking organization is unlikely to persuade believers. Nor will Google’s linking of a story to a Snopes takedown convince those who already believe Snopes is biased and untrustworthy. Some combination of audience and new media, jointly agreeing that old media was not to be trusted, created a powerful political bloc that not only made it possible to doubt everything but also found ways to punk traditional media. Whitney Phillips, Jessica Beyer, and Gabriella Coleman, who know troll culture, conclude that this is the lesson of our polarized news environment:

“More than fake news, more than filter bubbles, more than insane conspiracy theories about child sex rings operating out of the backs of Washington DC pizza shops, the biggest media story to emerge from the 2016 election was the degree to which far-right media were able to set the narrative agenda for mainstream media outlets.

“What Breitbart did, what conspiratorial far-right radio programs did, what Donald Trump himself did (to say nothing of what the silent contributors to the political landscape did), was ensure that what far-right pundits were talking about became what everyone was talking about, what everyone had to talk about, if they wanted to keep abreast of the day’s news cycle.”

So social media platforms can’t fix this problem; they can make some changes, but it’s not their problem to fix. If underlying divisions have led to the creation of separate, isolated worlds in which we can’t agree on basic facts, what do we do? Alison Head and John Wihbey (director and a board member of Project Information Literacy) see three key actors in tackling the problem: journalists, librarians, and teachers. All three have traditions of ethical and fair methods of doing “truth work,” traditions that have been challenged in various ways as these professionals curate, share, and shape our information environment. They write:

“The online “filter bubble” — the algorithmic world that optimizes for confirmation of our prior beliefs, and maximizes the reach of emotion-triggering content — seems the chief villain in all this. But that’s mistaking symptom for cause. The “filter” that truly matters is a layer deeper; we need to look hard at the cognitive habits that make meaning out of information in the first place. . . .

“A response on all three fronts — journalism, libraries, and schools — is essential to beating back this massive assault on truth. Social science tells us that our decisions and judgments depend more on shared community narratives than on individual rationality.”

Whether these traditional institutions, which have to various degrees been declared untrustworthy by those who gravitate to alternative institutions of knowledge, can help us negotiate the wide gap between community narratives that are so very different remains to be seen. But it’s clear the fix won’t be easy, and it won’t be a matter of tweaking algorithms.