4 Unit One, Part Four: Mixing Live Project and Microphone Placement

At this point in the unit, we should have a basic understanding of microphones, mixing consoles, cables, and speakers. Now we need to put those things together to create a “live mix” for a performer or a small group. Whether we have to set up one microphone for a speaking event or 16 separate channels for a full band on a large stage, where we place the microphones and which microphones we choose will often determine how well we manage sound for that event. These techniques can be applied to recording sessions or rehearsal spaces as well.

When I work with microphones and a mixing console for a live event, I start by following the general rules of microphone placement and channel strip settings (method). I also try to use the appropriate equipment to fit the specifications of the event and for safety (technical). But I almost always keep an open mind/ear to hear if there are any creative tweaks I can implement to give the overall sound an aesthetic upgrade (creative approach). Often, subtle artistic uses of panning or effects can really heighten the quality of the performance.

General Technique

Use a microphone whose frequency response suits the frequency range of the voice or instrument being recorded.

Vary microphone positions and distances until you achieve the monitored sound that you desire.

In the case of poor room acoustics, place the microphone very close to the loudest part of the instrument being recorded or isolate the instrument.

Personal taste is the most important component of microphone technique. Whatever sounds right to you is right.

Working Distance

Close Miking

Miking at a distance of 1 inch to about 3 feet from the sound source is considered close miking. This technique generally provides a tight, present sound and does an effective job of isolating the signal from other sounds in the acoustic environment.

Leakage

Leakage occurs when the signal is not properly isolated and a microphone picks up another nearby instrument. Leakage can make the mixdown process difficult, because a single track then contains more than one voice or instrument. Use the following methods to prevent leakage:

Place the microphones closer to the instruments.

Move the instruments farther apart.

Put some sort of acoustic barrier between the instruments.

Use directional microphones.

3 to 1 Rule

The 3:1 distance rule is a general rule of thumb for close miking. To prevent phase anomalies and leakage, the microphones should be placed at least three times as far from each other as the distance between the instrument and the microphone.
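The 3:1 rule can be checked with a little arithmetic. The sketch below (function names are hypothetical) tests a spacing against the rule and estimates how much quieter an instrument is in a neighbouring mic than in its own, using the inverse-square law. It assumes an idealized point source in free field and ignores the extra rejection a directional mic provides, so treat its numbers as a rough guide rather than a prediction for a real stage.

```python
import math

def satisfies_three_to_one(source_to_mic_m: float, mic_to_mic_m: float) -> bool:
    """True if the mics are at least 3x as far apart as each mic is from its source."""
    return mic_to_mic_m >= 3 * source_to_mic_m

def leakage_attenuation_db(own_distance_m: float, other_distance_m: float) -> float:
    """How many dB quieter a source is in a farther mic than in its own mic.
    Assumes inverse-square (free-field, point-source) behaviour: 20*log10(distance ratio)."""
    return 20 * math.log10(other_distance_m / own_distance_m)

# Example: a snare mic 25 cm from the snare, with the next mic 75 cm away.
print(satisfies_three_to_one(0.25, 0.75))
print(round(leakage_attenuation_db(0.25, 0.75), 1))
```

With a 3:1 ratio, the leaked copy in the neighbouring mic is roughly 9.5 dB below the direct sound in the instrument's own mic, which keeps the resulting phase interference (comb filtering) fairly mild when the two channels are mixed.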

Placement for Varying Instruments

Amplifiers

When miking an amplifier, such as for electric guitars, the mic should be placed 2 to 12 inches from the speaker. Exact placement becomes more critical at a distance of less than 4 inches. A brighter sound is achieved when the mic faces directly into the center of the speaker cone and a more mellow sound is produced when placed slightly off-center. Placing off-center also reduces amplifier noise.

Brass Instruments

Brass instruments produce high sound-pressure levels, and their mid to mid-high frequencies are strongly directional, radiating mostly straight out of the bell. Therefore, for brass instruments such as trumpets, trombones, and tubas, microphones should face slightly off the bell’s center at a distance of one foot or more to prevent overloading from windblasts.

Guitars

Technique for acoustic guitars depends on the desired sound. Placing a microphone close to the sound hole will achieve the highest output possible, but the sound may be bottom-heavy because the sound hole resonates at low frequencies. Placing the mic slightly off-center at 6 to 12 inches from the hole will provide a more balanced pickup. Placing the mic closer to the bridge at the same working distance helps capture the full range of the instrument.

Some people prefer to use a contact microphone, usually attached with a fairly weak temporary adhesive; however, this gives a rather different sound from a conventional microphone. The primary advantage is that the contact microphone’s performance is unchanged as the guitar is moved around during a performance, whereas with a conventional microphone on a stand, the distance between microphone and guitar would vary continually. Placement of a contact microphone can be adjusted by trial and error to get a variety of sounds. The same technique works quite well on other stringed instruments such as violins.

Pianos

Ideally, microphones would be placed 4 to 6 feet from the piano to allow the full range of the instrument to develop before it is captured. This isn’t always possible due to room noise, so the next best option is to place the microphone just inside the open lid. This applies to both grand and upright pianos.

Percussion

One overhead microphone can be used for a drum set, although two are preferable. If possible, each component of the drum set should be miked individually at a distance of 1 to 2 inches, as if it were its own instrument. This also applies to other drums such as congas and bongos. For large, tuned instruments such as xylophones, multiple mics can be used as long as they are spaced according to the 3:1 rule.

Voice

Standard technique is to put the microphone directly in front of the vocalist’s mouth, although placing slightly off-center can alleviate harsh consonant sounds (such as “p”) and prevent overloading due to excessive dynamic range. Several sources also recommend placing the microphone slightly above the mouth.

Woodwinds

A general rule for woodwinds is to place the microphone around the middle of the instrument at a distance of 6 inches to 2 feet. The microphone should be tilted slightly towards the bell or sound hole, but not directly in front of it.

Stereo (2) Microphone Placement

There exist a number of well-developed microphone techniques used for recording musical, film, or voice sources. Choice of technique depends on a number of factors, including:

- The collection of extraneous noise. This can be a concern, especially in amplified performances, where audio feedback can be a significant problem. Alternatively, it can be a desired outcome, in situations where ambient noise is useful (hall reverberation, audience reaction).

- Choice of a signal type: Mono, stereo or multi-channel.

- Type of sound-source: Acoustic instruments produce a sound very different from electric instruments, which are again different from the human voice.

- Situational circumstances: Sometimes a microphone should not be visible, or having a microphone nearby is not appropriate. In scenes for a movie the microphone may be held above the picture frame, just out of sight. In this way there is always a certain distance between the actor and the microphone.

- Processing: If the signal is destined to be heavily processed, or “mixed down”, a different type of input may be required.

- The use of a windshield as well as a pop shield, designed to reduce vocal plosives.

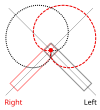

X-Y Technique

This technique uses two directional microphones at the same point, typically angled 90° or more apart. The stereo effect comes from differences in sound pressure level between the two microphones. Because there are no time-of-arrival differences, and therefore no phase ambiguities, X-Y recordings generally sound less “spacey” and have less depth than recordings made with an A-B setup.
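To see how level differences alone create a stereo image, the sketch below computes the left/right level difference a coincident pair produces for a source at a given angle. It assumes ideal first-order polar patterns (cardioid by default, `a = 0.5`), a pair angled 90° apart, and a source in front of the pair; the function names are illustrative, and real microphones deviate from these textbook patterns, especially at high frequencies.

```python
import math

def first_order_gain(off_axis_deg: float, a: float = 0.5) -> float:
    """Ideal first-order polar response: a + (1 - a) * cos(angle).
    a = 0.5 is cardioid, a = 0.0 is figure-8, a = 1.0 is omni."""
    return a + (1 - a) * math.cos(math.radians(off_axis_deg))

def xy_level_difference_db(source_deg: float, half_angle_deg: float = 45.0, a: float = 0.5) -> float:
    """Left-minus-right level in dB for a coincident X-Y pair and a frontal source.
    Positive angles are to the left; the left mic points at +half_angle_deg."""
    left = abs(first_order_gain(source_deg - half_angle_deg, a))
    right = abs(first_order_gain(source_deg + half_angle_deg, a))
    return 20 * math.log10(left / right)

for angle in (0, 15, 30, 45):
    print(f"{angle:>2} deg -> {xy_level_difference_db(angle):5.2f} dB")
```

Setting `a=0.0` models figure-8 (bidirectional) capsules; the `abs()` call accounts for the rear lobe, which picks up sound with reversed polarity. Because both capsules sit at the same point, there is no time difference to compute.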

When the microphones are bidirectional (figure-8) and placed facing ±45° with respect to the sound source, the X-Y setup is called a Blumlein Pair. The sonic image produced by this configuration is considered by many authorities to create a realistic, almost holographic soundstage.

A further refinement of the Blumlein Pair was developed by EMI in 1958, which called it “Stereosonic”. EMI added a little in-phase crosstalk above 700 Hz to better align the mid and treble phantom sources with the bass ones.

A-B Technique

This technique uses two parallel microphones, typically omnidirectional, some distance apart. They capture time-of-arrival stereo information as well as some level (amplitude) difference information, especially if employed close to the sound source(s). With the microphones about 50 cm (0.5 m) apart, a signal arriving from the side reaches one microphone approximately 1.5 ms (1 to 2 ms) before the other. Increasing the distance between the microphones effectively narrows the pickup angle.
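The 1.5 ms figure follows from dividing the spacing by the speed of sound. The sketch below generalizes it to any source angle with the standard far-field (plane-wave) approximation Δt = d·sin θ / c; the speed of sound (343 m/s, for air at about 20 °C) and the function name are assumptions for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 °C

def ab_time_difference_ms(spacing_m: float, source_deg: float) -> float:
    """How much earlier a distant source reaches the nearer of two spaced mics, in ms.
    0 deg is straight ahead; 90 deg is fully to one side (plane-wave approximation)."""
    path_difference_m = spacing_m * math.sin(math.radians(source_deg))
    return 1000.0 * path_difference_m / SPEED_OF_SOUND_M_S

print(f"{ab_time_difference_ms(0.5, 90):.2f} ms")
```

Because the delay grows with spacing, a wider pair reaches any given time difference at a smaller source angle, which is one way to picture why wider spacing narrows the pickup angle.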

The ORTF Technique

The ORTF technique was devised around 1960 at the Office de Radiodiffusion Télévision Française (ORTF) at Radio France.

ORTF combines both the volume difference provided as sound arrives on- and off-axis at two cardioid microphones spread to a 110° angle, as well as the timing difference as sound arrives at the two microphones spaced 17 cm apart.
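Because ORTF relies on both cues, it can help to compute them side by side. This sketch assumes ideal cardioid patterns, a distant source in front of the pair (between -90° and +90°), and a speed of sound of 343 m/s; only the 110° angle and 17 cm spacing come from the ORTF definition, and the function names are illustrative.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: air at roughly 20 °C
ORTF_SPACING_M = 0.17        # 17 cm capsule spacing
ORTF_HALF_ANGLE_DEG = 55.0   # half of the 110° included angle

def cardioid_gain(off_axis_deg: float) -> float:
    """Ideal cardioid polar response."""
    return 0.5 + 0.5 * math.cos(math.radians(off_axis_deg))

def ortf_cues(source_deg: float) -> tuple[float, float]:
    """Return (level difference in dB, left minus right;
    time in ms by which the sound reaches the left capsule first).
    Positive angles are to the left; intended for frontal sources."""
    left = cardioid_gain(source_deg - ORTF_HALF_ANGLE_DEG)
    right = cardioid_gain(source_deg + ORTF_HALF_ANGLE_DEG)
    level_db = 20 * math.log10(left / right)
    time_ms = 1000.0 * ORTF_SPACING_M * math.sin(math.radians(source_deg)) / SPEED_OF_SOUND_M_S
    return level_db, time_ms

for angle in (0, 30, 60, 90):
    level, delay = ortf_cues(angle)
    print(f"{angle:>2} deg: {level:5.1f} dB, {delay:.2f} ms")
```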

The microphones should be as similar as possible, preferably a frequency-matched pair of an identical type and model.

The result is a realistic stereo field that has reasonable compatibility with mono playback. Since the cardioid polar pattern rejects off-axis sound, less of the room’s ambience is picked up. This means that the mics can be placed farther away from the sound sources, resulting in a blend that may be more appealing. Further, the availability of purpose-built microphone mounts makes ORTF easy to set up.

As with all microphone arrangements, the spacing and angle can be adjusted slightly by ear for the best sound, which may vary with room acoustics, source characteristics, and other factors. However, the standard arrangement is the result of considerable research and experimentation, so its results are predictable and repeatable.

Mid/Side Technique

This technique employs a bidirectional microphone (with a Figure of 8 polar pattern) facing sideways and a cardioid (generally a variety of cardioid, although Alan Blumlein described the usage of an omnidirectional transducer in his original patent) facing the sound source. The capsules are stacked vertically and brought together as closely as possible, to minimize comb filtering caused by differences in arrival time.

The left and right channels are produced through a simple matrix: Left = Mid + Side, Right = Mid − Side (“minus” means you add the side signal with the polarity reversed). This configuration produces a completely mono-compatible signal and, if the Mid and Side signals are recorded (rather than the matrixed Left and Right), the stereo width (and with that, the perceived distance of the sound source) can be manipulated after the recording has taken place.
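The matrix is simple enough to express directly in code. This sketch decodes Mid/Side sample lists to Left/Right and back. The `width` factor, which scales the Side signal before decoding, is an illustrative addition showing how stereo width can be changed after recording (0 collapses the image to mono). Note that L + R = 2M, so the Side signal cancels completely when the channels are summed to mono.

```python
def ms_to_lr(mid: list[float], side: list[float], width: float = 1.0) -> tuple[list[float], list[float]]:
    """Decode Mid/Side: L = M + w*S, R = M - w*S (w is a hypothetical width control)."""
    left = [m + width * s for m, s in zip(mid, side)]
    right = [m - width * s for m, s in zip(mid, side)]
    return left, right

def lr_to_ms(left: list[float], right: list[float]) -> tuple[list[float], list[float]]:
    """Inverse matrix: M = (L + R) / 2, S = (L - R) / 2."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side

left, right = ms_to_lr([0.5, -0.25], [0.25, 0.125])
mono = [l + r for l, r in zip(left, right)]  # Side cancels: equals 2 * Mid
print(left, right, mono)
```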


Mixing a Live Band

Live sound mixing is the blending of multiple sound sources by an audio engineer using a mixing console or software. The sources include instruments and voices picked up by microphones (for the drum kit, lead vocals, and acoustic instruments like piano or saxophone) or by pickups (for instruments such as electric bass), as well as pre-recorded material, such as songs on CD or a digital audio player. Individual sources are typically equalized to adjust the bass and treble response and routed to effects processors, and the mix is ultimately amplified and reproduced via a loudspeaker system.[1] The live sound engineer listens to and balances the various audio sources in a way that best suits the needs of the event.[2]

Audio equipment is usually connected together in a sequence known as the signal chain. In live sound situations, the chain begins with input devices such as microphones, pickups, and DI boxes. These devices are connected, often via a multicore cable, to individual channels of a mixing console. Each channel on a mixing console typically has a vertical “channel strip”, a column of knobs and buttons used to adjust the level and the bass, midrange, and treble of the signal. The console also typically allows the engineer to add effects (reverb, etc.) to each channel before the channels are electrically summed (blended together).

Audio signal processing may be applied to (inserted on) individual inputs, groups of inputs, or the entire output mix, using processors that are internal to the mixer or external (outboard effects, which are often mounted in 19″ racks). An example of an inserted effect on an individual input is patching an Auto-Tune rackmount unit onto the lead vocalist’s channel to correct pitch errors. An example of an effect on a group of inputs would be adding reverb to all of the vocalists’ channels (lead vocalist and backing vocalists). An example of an effect on the entire output mix would be using a graphic equalizer to adjust the frequency response of the whole mix.

Front of House Mixing

The front of house (FOH) engineer focuses on mixing audio for the audience and most often operates from the middle of the audience or the last few rows. The output signals from the FOH console connect to the sound reinforcement system. Other non-audio crew members, such as the lighting console operator, might also work from the FOH position, since they need to see the show from the audience’s perspective.

Foldback

The foldback or monitor engineer focuses on mixing the sound that the performers hear on stage via a stage monitor system (also known as the foldback system). The monitor engineer’s role is important wherever the instruments and voices on stage are amplified. Usually, individual performers receive personalized feeds either via monitors placed on the stage floor in front of them or via in-ear monitors. The monitor engineer’s console is usually placed in the wings just off-stage, to make communication between the performers and the monitor engineer easier.

For smaller shows, such as bar and smaller club gigs, it is common for the monitors to be mixed from the front of house position, and the number of individual monitor mixes could be limited by the capabilities of the front of house mixing desk. In smaller clubs with lower- to mid-priced audio consoles, the audio engineer may only have a single “auxiliary send” knob on each channel strip. With only one “aux send”, an engineer would only be able to make a single monitor mix, which would normally be focused on meeting the needs of the lead singer. Larger, more expensive audio consoles may provide the capabilities to make multiple monitor mixes (e.g., one mix for the lead singer, a second mix for the backing vocalist, and a third for the rhythm section musicians). In a noisy club with high-volume rock music groups, monitor engineers may be asked for just the vocals in the monitors. This is because in a rock band, the guitarist, bassist and keyboardist typically have their own large amplifiers and speakers, and rock drums are loud enough to be heard acoustically. In large venues, such as outdoor festivals, bands may request a mix of the full band through the monitors, including vocals and instruments.

Drummers generally want a blend of all of the onstage instruments and vocals in their monitor mix, with extra volume provided for bass drum, electric bass and guitar. Guitar players typically want to hear the bass drum, other guitars (e.g., rhythm guitar) and the vocals. Bass players typically ask for a good volume of bass drum along with the guitars. Vocalists typically want to hear their own vocals. Vocalists may request other instruments in their monitor mix, as well.
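Each aux send works like a separate small mixer: every channel contributes to a monitor bus at its own send level, and the bus is the sum. The sketch below models that with short sample lists. The channel names, send levels, and helper functions are hypothetical, and real consoles also offer pre-/post-fader send options, which are not modeled here.

```python
import math

def db_to_gain(db: float) -> float:
    """Convert a send-knob setting in dB to a linear gain; -inf dB means fully off."""
    return 0.0 if db == -math.inf else 10 ** (db / 20)

def aux_mix(channels: dict[str, list[float]], sends_db: dict[str, float]) -> list[float]:
    """Sum every channel into one aux bus at its send level.
    Channels missing from sends_db are treated as turned all the way down."""
    length = len(next(iter(channels.values())))
    bus = [0.0] * length
    for name, samples in channels.items():
        gain = db_to_gain(sends_db.get(name, -math.inf))
        for i, x in enumerate(samples):
            bus[i] += gain * x
    return bus

channels = {"lead_vox": [1.0, 0.5], "kick": [0.5, 0.0], "bass": [0.25, 0.25]}
singer_wedge = aux_mix(channels, {"lead_vox": 0.0})  # vocals only
drum_fill = aux_mix(channels, {"lead_vox": -6.0, "kick": 0.0, "bass": 0.0})  # extra kick and bass
print(singer_wedge, drum_fill)
```

A console with only one aux send per channel can compute just one such bus, which is why smaller desks limit the number of distinct monitor mixes.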

Broadcast

The broadcast mixer is responsible for the audio delivered for radio or television broadcast. Broadcast mixing is usually performed in an outside broadcast (OB) van parked outside the venue.

Sound Checks and Technical Rehearsals

For small events, a soundcheck is often conducted a few hours before the show. The instruments (drum kit, electric bass and bass amplifier, etc.) are set up on stage, and the engineer places microphones near the instruments and amplifiers in the most appropriate locations to pick up the sound. Some instruments, such as the electric bass, are connected to the audio console via a DI box. Once all the instruments are set up, the engineer asks each instrumentalist to perform alone, so that the levels and equalization for each instrument can be adjusted. Since a drum kit contains a number of drums, cymbals and percussion instruments, the engineer typically adjusts the level and equalization for each mic’d drum or cymbal. Once the sound of each individual instrument is set, the engineer asks the band to play a song from their repertoire, so that the levels of the instruments relative to one another can be adjusted.

Mics are set up for the lead vocalist and backup vocalist and the singers are asked to sing individually and as a group, so that the engineer can adjust the levels and equalization. The final part of the soundcheck is to have the rhythm section and all the vocalists perform a song from their repertoire. During this song, the engineer can adjust the balance of the different instruments and vocalists. This allows the sound of the instruments and vocals to be fine-tuned prior to the audience hearing the first song.

By the 2010s, many professional bands and major venues were using digital mixing consoles with automated controls and digital memory for previous settings. The settings of previous shows can be saved and recalled in the console, so a band can start playing after only a limited soundcheck. Automated mixing consoles are a great time saver for concerts where the main band is preceded by several support acts. Using an automated console, the engineer can record the settings that each band asks for during their individual soundchecks. Then, during the concert, the engineer can call up the settings from memory, and the faders will automatically move to the positions they were in during the soundcheck. On a larger scale, technical rehearsals may be held in the days or weeks leading up to a concert. These rehearsals are used to fine-tune the many technical aspects (such as lighting, sound, and video) associated with a live performance.
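Scene memory amounts to storing a snapshot of settings under a name and restoring it later. The sketch below is a hypothetical model (the `Scene` and `SceneMemory` classes do not reflect any manufacturer's file format) that stores fader levels and mutes per act during soundcheck and recalls them during the show. Copies are made on store and on recall so that moving a fader on the live surface does not silently change the saved scene.

```python
from dataclasses import dataclass, field

@dataclass
class Scene:
    """Hypothetical snapshot of console settings for one act."""
    faders_db: dict[str, float] = field(default_factory=dict)
    mutes: set[str] = field(default_factory=set)

    def copy(self) -> "Scene":
        return Scene(dict(self.faders_db), set(self.mutes))

class SceneMemory:
    """Named scene storage, e.g. one scene per band from its soundcheck."""

    def __init__(self) -> None:
        self._scenes: dict[str, Scene] = {}

    def store(self, name: str, scene: Scene) -> None:
        self._scenes[name] = scene.copy()  # snapshot, not a live reference

    def recall(self, name: str) -> Scene:
        return self._scenes[name].copy()

memory = SceneMemory()
live = Scene({"lead_vox": -5.0, "kick": -8.0}, {"acoustic_gtr"})
memory.store("support_act", live)
live.faders_db["lead_vox"] = 0.0  # the engineer keeps working after saving
recalled = memory.recall("support_act")
print(recalled.faders_db)
```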

Training and Background

Audio engineers must have extensive knowledge of audio engineering to produce a good mix, because they have to understand how to mix different instruments and amplifiers, what types of mics to use, where to place the mics, how to attenuate “hot” instrument signals that are overloading and clipping the channel, how to prevent audio feedback, how to set the audio compression for different instruments and vocals, and how to prevent unwanted distortion of the vocal sound. However, to be a professional live sound engineer, an individual needs more than just technical knowledge. They must also understand the types of sounds and tones that various musical acts in different genres expect in their live sound mix. This knowledge of musical styles is typically learned from years of experience listening to and mixing sound in live contexts. A live sound engineer must know, for example, the difference between creating the powerful drum sound that a heavy metal drummer will want for their kit, versus a drummer from a Beatles tribute band.


EQ Cheat Sheet

Acoustic Drums

Be careful when boosting top end on close mic’d tracks. This can accentuate cymbal bleed and make the drums sound harsh. If you’re struggling to achieve brightness without bringing up the cymbals, try using gates to reduce the bleed between hits. You can also layer in drum samples (my first choice) and EQ them for brightness without bringing up the bleed.

There are two approaches for EQing overheads. You can either:

- Filter out all the low end and use them as cymbal mics, or…

- Leave them as-is and use them to form the overall sound of the kit

Option #1 will create a separated, sculpted sound that works well in modern genres. Option #2 will lead to a more natural sound that works well for folk and acoustic music.

Try rolling off everything below 40 Hz on the kick. This can often tighten things up.
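A roll-off like this is a high-pass (low-cut) filter. The sketch below implements a second-order biquad high-pass using the widely published Audio EQ Cookbook formulas by Robert Bristow-Johnson; it is an illustration, not the filter inside any particular console or plug-in. The Q of 0.7071 (a Butterworth response, 12 dB per octave) and the 48 kHz sample rate are assumptions. The loop at the end measures how much the filter attenuates test tones at a few frequencies.

```python
import math

def highpass_biquad(samples: list[float], cutoff_hz: float, sample_rate: float, q: float = 0.7071) -> list[float]:
    """Second-order high-pass (Audio EQ Cookbook coefficients), direct form I."""
    w0 = 2 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    # Normalize every coefficient by a0.
    b0 = (1 + cos_w0) / 2 / a0
    b1 = -(1 + cos_w0) / a0
    b2 = (1 + cos_w0) / 2 / a0
    a1 = -2 * cos_w0 / a0
    a2 = (1 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

def rms(values: list[float]) -> float:
    return math.sqrt(sum(v * v for v in values) / len(values))

fs = 48_000
gains = {}
for freq in (20, 60, 1_000):
    tone = [math.sin(2 * math.pi * freq * n / fs) for n in range(fs)]  # 1 second
    filtered = highpass_biquad(tone, cutoff_hz=40, sample_rate=fs)
    # Measure only the second half, after the filter's start-up transient has died away.
    gains[freq] = 20 * math.log10(rms(filtered[fs // 2:]) / rms(tone[fs // 2:]))
    print(f"{freq:>5} Hz: {gains[freq]:6.1f} dB")
```

With these settings, a tone at 20 Hz (an octave below the cutoff) comes out roughly 12 dB lower, while 1 kHz passes essentially unchanged.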

Since each mic has bleed, you should always EQ drums with all the mics playing together. You can often filter the hi-hat aggressively. Try cutting everything below 500 Hz.

Acoustic drums will often need lots of EQ. Don’t be afraid to boost or cut by 10 dB or more.

Listen to a few modern records and notice how bright the kick is. The key to getting your kick to cut isn’t more low end, but more top end.

Vocals

When boosting top end, listen for harshness. Often, this occurs when your boost extends too far down the frequency spectrum. If this happens, move the boost higher or tighten the Q. You can also add a small cut in the upper midrange to counteract any harshness.

High-pass filtering will often be necessary, but it’s not always needed. If you don’t hear a problem, there’s no need to fix it.

You can high-pass female vocals much higher than male vocals without affecting the sound of the voice.

Listen for resonances in the lower midrange.

Bass

To add presence, boost higher than you think. The solution is not more low end, but to bring out the harmonics (start around 700 – 1200 Hz).

Distortion will often do a better job at adding presence than EQ.

Acoustic Guitars

Don’t be afraid to roll off the low end. Often, all you want is the sound of the pick hitting the strings.

Watch for resonances in the lower midrange.

Electric Guitars

Listen to them with the bass. Often, you can remove quite a bit of low end. The guitars may sound thin on their own, but with the bass, they’ll sound great.

Watch for harsh resonances in the upper midrange (2 – 4 kHz).

If electric guitars are recorded well, they often need little-to-no EQ. For presence, boost around 4 kHz.
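A presence boost of this kind is usually a peaking (bell) EQ band. The sketch below builds one from the Audio EQ Cookbook peaking formulas and evaluates its frequency response directly, showing that the boost is full at the center frequency and fades away with distance from it. The +3 dB gain, Q of 1.0, and 48 kHz sample rate are illustrative assumptions; only the 4 kHz center comes from the tip above.

```python
import cmath
import math

def peaking_coefficients(center_hz: float, gain_db: float, q: float, sample_rate: float):
    """Peaking-EQ biquad (numerator, denominator) from the Audio EQ Cookbook."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    num = (1 + alpha * a, -2 * math.cos(w0), 1 - alpha * a)
    den = (1 + alpha / a, -2 * math.cos(w0), 1 - alpha / a)
    return num, den

def response_db(num, den, freq_hz: float, sample_rate: float) -> float:
    """Magnitude of the filter's response at freq_hz, in dB (evaluated on the unit circle)."""
    z_inv = cmath.exp(-1j * 2 * math.pi * freq_hz / sample_rate)
    top = num[0] + num[1] * z_inv + num[2] * z_inv ** 2
    bottom = den[0] + den[1] * z_inv + den[2] * z_inv ** 2
    return 20 * math.log10(abs(top / bottom))

fs = 48_000
num, den = peaking_coefficients(center_hz=4_000, gain_db=3.0, q=1.0, sample_rate=fs)
for f in (100, 1_000, 2_000, 4_000, 10_000):
    print(f"{f:>6} Hz: {response_db(num, den, f, fs):+5.2f} dB")
```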

Synths

Your approach should vary widely depending on what you’re working with.

Many modern synths are ear-piercingly bright. Don’t be afraid to roll off top end. This can help them sink back into a mix.

Synths will often fill up the entire frequency spectrum. In a busy mix, you’ll often have to whittle them down using high- and low-pass filters.

You can be aggressive when EQing synths, because we have no expectations about what they should sound like. This gives you more flexibility than when EQing an organic instrument, where you can’t stray too far from what the instrument sounds like in real life.

Piano

Boosting 5 kHz can bring out the sound of the hammers hitting the strings. This will make the piano sound harder, which can help it cut through a busy mix.